#2025.12.14 The Real Risk of AI Coding Isn’t Hallucinations, It’s Verification Debt

Verification Debt, Precision Specs, Human-to-Human Code Reviews

Today’s post is a deep dive into the AWS re:Invent 2025 keynote by Dr. Werner Vogels.

AI is currently driving more change in software development than anything we’ve seen in years. It’s often framed as a threat to developer jobs, even the end of programming. I don’t believe that, and the keynote opens with a useful reminder of why.

Werner starts with a short video highlighting past “end of developer” moments: assemblers, compilers, structured programming, object-oriented design, the shift from monoliths to services, and the move from on-premises to cloud computing. Each change reshaped how software is built. Each expanded the role of developers. And each time, the number of developers continued to grow. There are nearly a million more of us compared to last year.

The real question raised is the one most developers are already asking:

Will AI make me obsolete?

Werner’s answer is simple:

No (if you evolve).

Here are my three key takeaways on what that evolution looks like.

1. Verification Debt Is the Real Risk

Hallucinations are often cited as the biggest problem with AI-generated code. They are an issue, but they’re no longer the most dangerous one.

The bigger risk is verification debt.

AI makes code generation fast and easy. That speed creates a new failure mode: you keep moving forward because the output looks reasonable and appears to work, without confirming that it actually does. Over time, unchecked assumptions, missed edge cases, and misunderstood intent quietly accumulate.

Vibe coding without human review doesn’t support innovation. It’s gambling.

The problem isn’t that AI gets things wrong.

It’s that you stop checking carefully enough.
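To make "checking carefully enough" concrete, here is a minimal sketch in Python. Everything in it is hypothetical and not from the keynote: `parse_duration` stands in for a small helper an AI assistant might generate, and `verify_parse_duration` is the explicit check you would run before trusting it. The point is that the edge cases are written down and executed, not assumed.

```python
import re


def parse_duration(text: str) -> int:
    """Hypothetical generated helper: convert strings like '1h30m' to seconds."""
    pattern = re.compile(r"(\d+)([hms])")
    units = {"h": 3600, "m": 60, "s": 1}
    matches = pattern.findall(text)
    # Reject input with no matches, or with leftover characters
    # that the pattern did not consume (e.g. '1h30' or '-5m').
    if not matches or pattern.sub("", text) != "":
        raise ValueError(f"unrecognised duration: {text!r}")
    return sum(int(number) * units[unit] for number, unit in matches)


def verify_parse_duration() -> list[str]:
    """Run explicit checks; an empty list means every check passed."""
    failures = []

    # Inputs that must parse, with the exact result we expect.
    valid_cases = {"1h30m": 5400, "45s": 45, "0m": 0, "2h": 7200}
    for text, expected in valid_cases.items():
        got = parse_duration(text)
        if got != expected:
            failures.append(f"{text!r}: expected {expected}, got {got}")

    # Inputs that must be rejected instead of silently half-parsed.
    for bad in ["", "abc", "1h30", "-5m", "1.5h"]:
        try:
            parse_duration(bad)
        except ValueError:
            continue
        failures.append(f"{bad!r} should have been rejected")

    return failures


if __name__ == "__main__":
    problems = verify_parse_duration()
    # A non-zero exit code lets a CI job or pre-commit hook block the change.
    raise SystemExit(1 if problems else 0)
```

Wired into a CI job or pre-commit hook, a check like this turns verification from a habit into a gate: the code cannot move forward just because it looks reasonable.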

Challenge: Where in your workflow is verification explicitly enforced, rather than assumed?

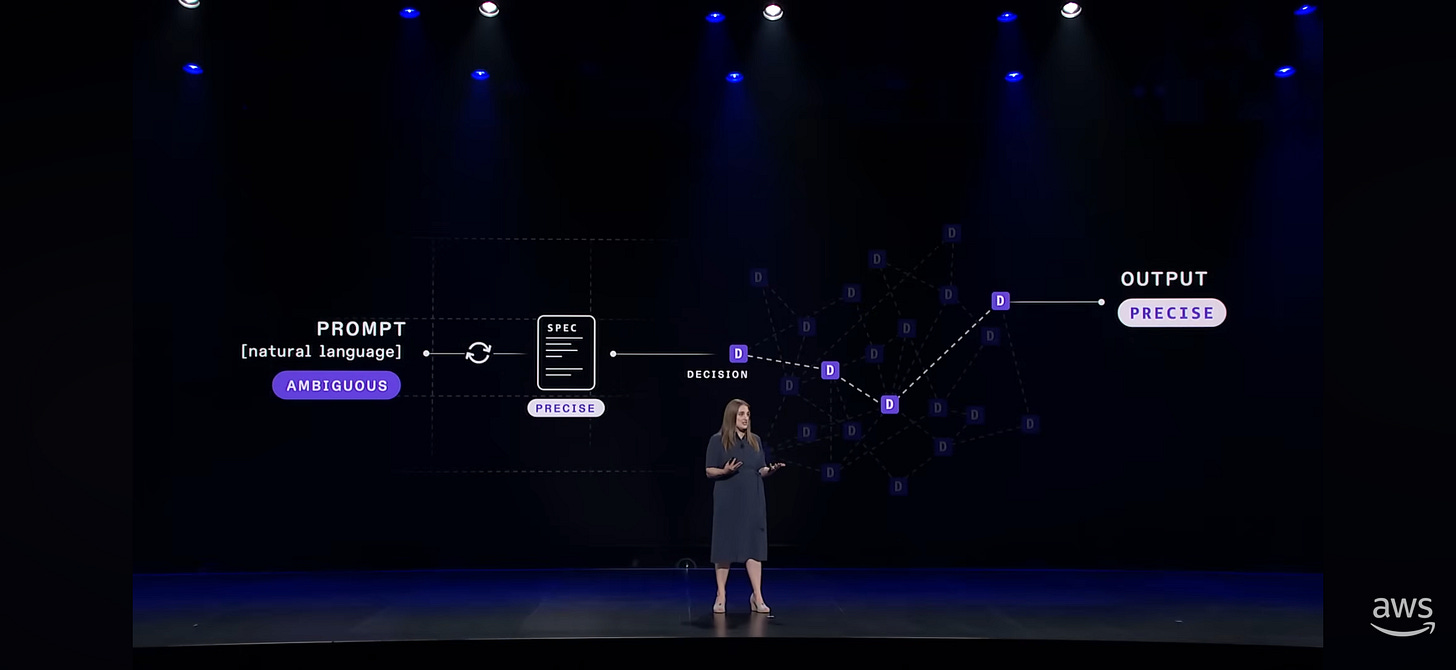

2. Specs Enable Precision, Not Bureaucracy

One of the most interesting parts of the keynote was the focus on structured intermediate steps: the Kiro standard specs of requirements, designs, and tasks.

I’ve been testing this approach myself, and it holds up well in smaller “hobby projects” and PoCs.

These intermediate non-coding steps aren’t overhead. They save time. They help refine the problem by allowing verification at a higher level, enabling control and review before you get lost in the details of code.

It’s far easier to verify the intermediate spec than the code, so it’s worth creating and refining the spec as you go.
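As an illustration of why a spec is easier to verify than code, here is a small Python sketch of a traceability check. The `Spec` and `Task` structures, the `R1`-style ids, and the example content are all assumptions I made up for this post. This is not Kiro's actual file format, just one way to model requirements and tasks so that gaps become visible before any code exists.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One planned unit of work and the requirement ids it claims to cover."""
    title: str
    covers: list[str] = field(default_factory=list)


@dataclass
class Spec:
    """Hypothetical spec model: requirement id -> description, plus tasks."""
    requirements: dict[str, str]
    tasks: list[Task]


def uncovered_requirements(spec: Spec) -> list[str]:
    """Requirement ids that no task covers."""
    covered = {req_id for task in spec.tasks for req_id in task.covers}
    return sorted(set(spec.requirements) - covered)


def unknown_references(spec: Spec) -> list[str]:
    """Ids that tasks refer to but that are not defined as requirements."""
    referenced = {req_id for task in spec.tasks for req_id in task.covers}
    return sorted(referenced - set(spec.requirements))


# Made-up example spec for a password reset feature.
example = Spec(
    requirements={
        "R1": "User can request a password reset email",
        "R2": "Reset links expire after a fixed time",
        "R3": "Failed reset attempts are rate limited",
    },
    tasks=[
        Task("Add reset request endpoint", covers=["R1"]),
        Task("Store and check link expiry", covers=["R2"]),
        Task("Add audit logging", covers=["R9"]),
    ],
)

if __name__ == "__main__":
    print("Uncovered:", uncovered_requirements(example))
    print("Unknown:", unknown_references(example))
```

In this example, the rate-limiting requirement has no task and one task points at a requirement that doesn’t exist. Both are cheap to spot at the spec level and much harder to spot once they are buried in generated code.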

Challenge: Where do you deliberately pause to review specs and design before the code is even created?

3. Human-to-Human Code Reviews Still Matter

AI is excellent for learning, exploration, and planning. Tailored explanations, instant answers, and the freedom to ask “dumb questions” make it a powerful tool for individual productivity.

But it doesn’t replace human-to-human code review.

Seeing how other developers think, structure problems, and use AI in real workflows remains one of the fastest ways to improve. I was reminded of this again recently during an internal AI community-of-practice session — the real value came from developers showing how they work, not from the tools themselves.

AI accelerates individuals. Humans still level up teams.

Challenge: Ask a colleague to walk you through how they’re actually using AI today. Not the theory, but the practice.

Have pride in your work

Most of what we build nobody will ever see.

The best builders do things properly, even when no one is watching.

Have pride in your work

AI doesn’t change that. It’s another tool to master.

Have a great week.
