Thoughts For The Week - 2025.09.28

The Dunning-Kruger effect, Managers vs Leaders and How To Tell If Something is AI Written

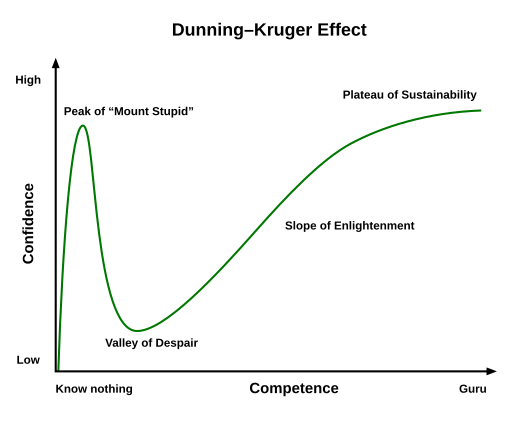

1. The Dunning-Kruger effect

People who are new to a topic, or who have low ability in it, often overestimate their competence. They simply don’t know what they don’t know. Experts, by contrast, tend to be more cautious because they understand the full scope and scale of the topic.

Incompetence Breeds Overconfidence

When you don’t know enough about a topic, you also don’t know what you don’t know. That ignorance can lead you to overestimate your abilities.

Expertise Breeds Doubt

The more you learn, the more you see how vast the subject is—and how much you still have to master. This can lead even highly competent individuals to underestimate their expertise.

Watch out when you're new to something. Ask more questions to try and find out what you don’t know.

2. Managers vs Leaders

It’s a pattern at so many organizations: when the heat is on, people either retreat into management mode or step up into leadership. The difference isn’t about job titles or org charts—it’s about fundamental choices in how we treat people when it matters most.

Simon Sinek’s post lists five things that managers do but leaders never would:

Managers Hoard Information. Leaders Overshare.

Managers Weaponize Policy. Leaders Bend Rules for People.

Managers “Fire Fast.” Leaders Coach, Then Help People Land Softly.

Managers Avoid Hard Conversations. Leaders Run Toward Them.

Managers Reward Compliance. Leaders Reward Dissent.

3. How To Tell If Something is AI Written

You’ve probably noticed a few words that stand out and make you think something was AI-written, e.g. “fostering”, “leveraging” or “empowering”. Hollis Robbins’ post gives some more general rules for spotting AI-written text.

Humans naturally link signifiers (words) with signifieds (mental concepts, lived experiences, shared cultural meaning). LLMs produce text by predicting the next most likely word based on what’s come before. This means that the model never “grasps” the underlying concept.
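To make “predicting the next most likely word” concrete, here is a deliberately tiny sketch. It swaps the neural network of a real LLM for simple bigram counts (how often one word directly follows another) over a made-up four-sentence corpus; the corpus and the function names are illustrative assumptions, not how any production model is built. It does show the point above: the model “knows” dog only through the words that tend to come after it.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word is immediately followed by each other word."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes straight after it.
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def next_word_distribution(follows: Dict[str, Counter], word: str) -> Dict[str, float]:
    """Turn the raw counts after `word` into probabilities that sum to 1."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # word never seen with a successor: nothing to predict
    return {w: c / total for w, c in counts.items()}


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Greedily pick the single most likely next word (None if there is none)."""
    dist = next_word_distribution(follows, word)
    return max(dist, key=dist.get) if dist else None


# Made-up toy corpus, purely for illustration.
corpus = [
    "the dog barked all night",
    "the dog wants a walk",
    "my pet dog barked at the postman",
    "the cat slept all day",
]
model = train_bigrams(corpus)
print(next_word_distribution(model, "dog"))  # barked ≈ 0.67, wants ≈ 0.33
print(predict_next(model, "dog"))            # barked
```

Nothing in `model` knows what a dog is; it only knows that, in this corpus, “barked” follows “dog” twice as often as “wants” does. A real LLM conditions on far more of the preceding text and uses a neural network instead of counts, but its output is still a probability distribution over possible continuations.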

🔎 Humans vs. LLMs: Signifiers and Signifieds

| Aspect | Humans | LLMs |
|---|---|---|
| Signifier (the word or symbol) | Hear or read the word “dog.” | Process the token “dog.” |
| Signified (the concept/meaning) | Immediately link it to the mental concept of a furry animal, personal memories of dogs, maybe even the smell of wet fur. | No concept. Only knows that “dog” statistically appears near “bark,” “pet,” “walk,” etc. |
| Connection | Signifiers are grounded in lived experience, perception, culture, and embodiment. | Signifiers are connected only to other signifiers in the training data. |
| Process | Interpret meaning → integrate with knowledge → respond with intention. | Predict the next token via autoregression (a probability distribution over possible continuations). |
| Example sentence | “My neighbour’s dog barked all night, so I couldn’t sleep.” → I visualise the neighbour, recall barking, feel annoyance. | “My neighbour’s dog barked all night…” → high probability that “so I couldn’t sleep” or similar comes next, but no felt annoyance. |

So here’s a handy rule: if you read a passage and can’t picture anything, if nothing springs to mind, it’s probably AI.

Telltale Contrasting Structures

Another rule: look for formulations like “it’s not just X, but also Y” or “rather than A, we should focus on B.” This structure is a form of computational hedging. Because an LLM only knows the relationships between words, not between words and the world, it wants to avoid falsifiable claims. (I’m saying “want” here as a joke, but it helps to see LLMs as wafflers.) By being all balance-y, it can sound comprehensive without committing to anything.
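To make this rule a bit more mechanical, here is a rough Python sketch that flags the two formulations quoted above with regular expressions. The pattern list and the `find_contrasts` name are illustrative assumptions, not Robbins’ method; the patterns catch only a few wordings, and humans use these constructions too, so a match is a hint rather than proof.

```python
import re
from typing import List

# Assumed patterns for the two formulations quoted above. Real text varies far more,
# and humans use these constructions too, so a match is a hint, not proof.
CONTRAST_PATTERNS = [
    # "not just / not only / not merely X, but (also) Y"
    re.compile(r"\bnot\s+(?:just|only|merely)\b[^.?!]*?\bbut\b(?:\s+also)?", re.IGNORECASE),
    # "rather than A, we/you/they should ..."
    re.compile(r"\brather\s+than\b[^.?!]*?\b(?:we|you|they)\s+should\b", re.IGNORECASE),
]


def find_contrasts(text: str) -> List[str]:
    """Return every fragment of `text` that matches one of the contrast patterns."""
    hits: List[str] = []
    for pattern in CONTRAST_PATTERNS:
        # [^.?!]*? keeps each match inside a single sentence.
        hits.extend(m.group(0) for m in pattern.finditer(text))
    return hits


sample = (
    "It's not just a tool, but also a partner in growth. "
    "Rather than chasing metrics, we should focus on people. "
    "My neighbour's dog barked all night."
)
for hit in find_contrasts(sample):
    print(hit)
# not just a tool, but also
# Rather than chasing metrics, we should
```

Hits are grouped by pattern rather than by position in the text, which keeps the sketch short; the plain sentence about the neighbour’s dog produces no match.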

Have a great week.